Defensive Statistics

Normal or approximately normal subjects are less useful objects of research than their pathological counterparts.1

In the realm of software development, reliability is crucial. This is especially true when creating systems that automatically analyze performance measurements to maintain optimal application performance. To achieve the desired level of reliability, we need a set of statistical approaches that provide accurate and trustworthy results. These approaches must work even when faced with varying input data sets and multiple violated assumptions, including malformed and corrupted values. In this blog post, I introduce “Defensive Statistics” as an appropriate methodology for tackling this challenge.

When working with an automatic analysis system, it is vital to establish a statistical protocol in advance. This should be done without any prior knowledge of the target data. Additionally, we have no control over the measurements gathered and reported, as these components are maintained by other developers. For the system to be considered reliable, it must consistently provide accurate reports on any set of input values. This must hold true regardless of data malformation or distortion. Ensuring a low Type I error rate is essential for the trustworthiness of our system. Unfortunately, most classic statistical methods have unsupported pathological corner cases that arise outside the declared assumptions.

To address these challenges, our statistical methods must meet several key requirements:

- Incorporate nonparametric statistical methods, as relying on the normal distribution or other parametric models may lead to incorrect results.

- Use robust statistics to effectively handle extreme outliers that appear in the case of heavy-tailed distributions.

- Work with various data types, including discrete distributions, continuous-discrete mixtures, and the Dirac delta distribution with zero variance. Even if the underlying distribution is supposed to be continuous, the discretization effect may appear due to the limited resolution of measurement devices.

- Anticipate and account for multimodality in real-life distributions, as well as low-density regions in the middle of the distribution (even around the median).

- Prioritize small sample sizes, considering the expense and time constraints associated with data gathering.

- Support weighted samples to address non-homogenous data and allow proper aggregation of measurements from various repository revisions.

While none of the corner cases should lead to system dysfunction, we care about statistical efficiency for the majority of input datasets, in which the typical assumptions are satisfied. Therefore, methods of protection from the corner cases should not lead to tangible efficiency loss.

Since there isn’t a pre-existing term that encapsulates these requirements, I propose the term “Defensive Statistics.” This term is inspired by defensive programming, which also prioritizes reliable program execution in cases of invalid, corrupted, or unexpected input. The main objectives of defensive statistics are:

- Always provide valid and reliable results for any input data set, no matter how many original assumptions are violated.

- Maximize statistical efficiency when most input data sets follow a typical set of assumptions.

Manual one-time investigations may not require a high level of reliability since errors can be addressed by hand. However, methods of defensive statistics are essential for the automatic analysis of a wide range of uncontrollable inputs. By incorporating the discussed principles into our work, we can ensure that our software consistently delivers accurate and trustworthy results. This remains true even when faced with complex and unpredictable data sets. Adopting defensive statistics leads to more resilient, reliable, and efficient systems in the constantly evolving world of software development.

Recently, I was rereading Robust Statistics and I found this quote about the difference between robust and nonparametric statistics (page 9):

Robust statistics considers the effects of only approximate fulfillment of assumptions, while nonparametric statistics makes rather weak but nevertheless strict assumptions (such as continuity of distribution or independence).

This statement may sound obvious. Unfortunately, facts that are presumably obvious in general are not always so obvious at the moment. When a researcher works with specific types of distributions for a long time, the properties of these distributions may be transformed into implicit assumptions. This implicitness can be pretty dangerous. If an assumption is explicitly declared, it can become a starting point for a discussion on how to handle violations of this assumption. The implicit assumptions are hidden and therefore conceal potential issues in cases when the collected data do not meet our expectations.

A switch from parametric to nonparametric methods is sometimes perceived as a rejection of all assumptions. Such a perception can be hazardous. While the original parametric assumption is actually neglected, many researchers continue to act like the implicit consequences of this assumption are still valid.

Since normality is the most popular parametric assumption, I would like to briefly discuss connected implicit assumptions that are often perceived not as non-validated hypotheses, but as essential properties of the collected data.

- Light-tailedness.

Assumption: the underlying distribution is light-tailed; the probability of observing extremely large outliers is negligible. Fortunately, the biggest part of robust statistics is trying to address violations of these assumptions. - Unimodality.

Assumption: the distribution is unimodal; no low-density regions in the middle part of the distribution are possible. When such regions appear (e.g., due to multimodality), most classic estimators may stop behaving acceptably. In such cases, we should evaluate the resistance to the low-density regions of the selected estimators. - Symmetry.

Assumption: the distribution (including the tails) is absolutely symmetric; the skewness is zero. The side effects of assumption violation are not always tangible. E.g., the classic Tukey fences are implicitly designed to catch outliers in the symmetric case. While this method is still applicable to highly skewed distributions, the discovered outliers may significantly differ from our expectations. - Continuity.

Assumption: the underlying distribution continues and therefore, no tied values in the collected data are possible. Even if the true distribution is actually a continuous one, discretization may appear due to the limited resolution of the selected measurement devices. - Non-degeneracy.

Assumption: in any collected sample, we have at least two different observations. In real life, some distributions can degenerate to the Dirac delta function, which leads to zero dispersion. - Unboundness.

Assumption: all values from $-\infty$ to $\infty$ are possible. This assumption may significantly reduce the accuracy of the used estimator since they do not account for the actual domain of the underlying distribution. The classic example is the kernel density estimation based on the normal kernel that always returns the probability density function defined on $[-\infty; \infty]$. - Independency.

Assumption: observations are independent of each other. In real life, the observations can have hidden correlations that are so hard to evaluate that researchers prefer to pretend that all the measurements are independent. - Stationarity.

Assumption: the properties of the distribution do not change over time. The old Greek saying goes “You can’t step in the same river twice” (attributed to Heraclitus of Ephesus). But can you draw a sample from the same distribution twice? For the sake of simplicity, researchers often tend to neglect variable external factors that affect the distribution. However, the real world is always a fluke. - Plurality.

Assumption: the collected samples always contain at least two observations. Sometimes, we have to deal with samples that contain a single element (e.g., in sequential analysis). In such a case, equations that use $n-1$ in a denominator stop being valid.

I believe that it is important to think and speculate about the behavior of the statistical methods in corner cases of violated assumptions. Clear explanations of how to handle these corner cases for each statistical approach help to implement reliable automatic analysis procedures following the principles of defensive statistics.

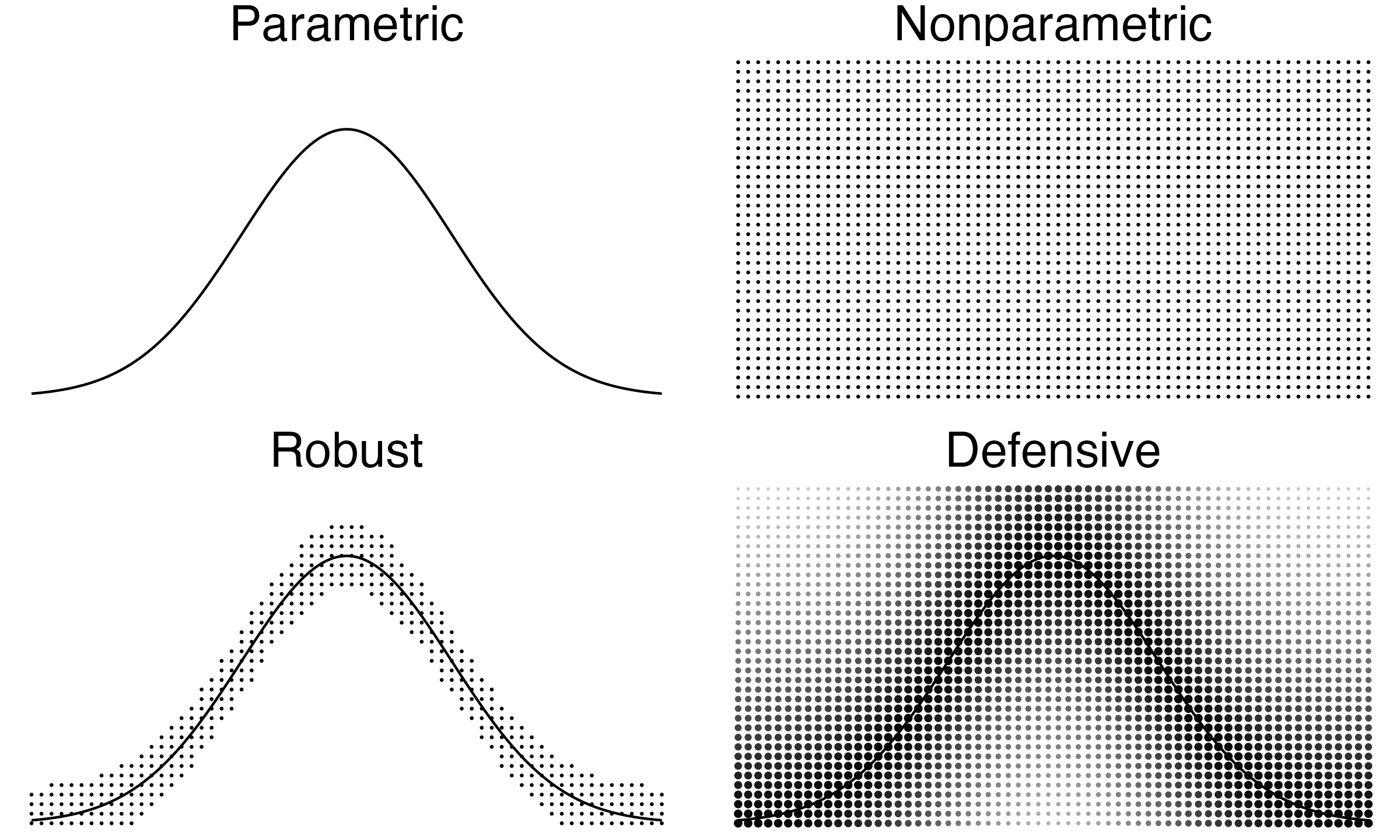

Recently, I started writing about defensive statistics. The methodology allows having parametric assumptions, but it adjusts statistical methods so that they continue working even in the case of huge deviations from the declared assumptions. This idea sounds quite similar to nonparametric and robust statistics. In this post, I briefly explain the difference between different statistical methodologies.

- Parametric statistics

Parametric statistics always have a strong parametric assumption about the distribution form. The most common example is the normality assumptions so that all the corresponding methods heavily rely on the fact that the true target distribution is a normal one. The methods of parametric statistics stop working even in the case of small deviations from the declared assumptions. The classic examples of relevant problems are the sample mean and the sample standard deviation which do not provide reliable estimations in the case of extreme outliers caused by a heavy-tailed distribution. - Nonparametric statistics

While pure parametric methods are well-known and well-developed, they are not always applicable in practice. Unfortunately, perfect parametric distributions are mental constructions: they exist only in our imagination, but not in the real world. That is why the usage of parametric methods in their classic form is rarely a smart choice. Fortunately, we have a handy alternative: the nonparametric statistic. This methodology rejects consider any parametric assumptions. While some implicit assumptions, like continuity or independence, still may be required, nonparametric statistics avoid considering any parametric models. Such methods are great when we have no prior knowledge about target distributions. However, if the majority of collected data samples follow some patterns (which can be expressed in the form of parametric assumptions), nonparametric statistics do not look advantageous compared to the parametric methods because it is not capable of exploiting this prior knowledge to increase statistical efficiency. - Robust statistics

Unlike parametric statistics, robust methods allow slight deviations from the declared parametric model. This gives reliable results even if some of the collected measurements do not meet our expectations. Unlike nonparametric statistics, robust methods do not fully reject parametric assumptions. This gives higher statistical efficiency compared to classic nonparametric statistics. Unfortunately, classic robust statistics have issues in the case of huge deviations from the assumptions. Usually, robust statistical methods are not capable of handling extreme corner cases, which also can arise in practice. - Defensive statistics

Defensive statistics tries to get benefits from all the above methodologies. Like parametric statistics, it accepts the fact that the majority of the collected data samples may follow specific patterns. If we express these patterns in the form of assumptions, we can significantly increase statistical efficiency in most cases. Like robust statistics, it accepts small deviations from the assumptions and continues to provide reliable results in the corresponding cases. Like nonparametric statistics, it accepts huge deviations from the assumptions and tries to continue to provide reasonable results even in the cases of malformed or corrupted data. However, unlike nonparametric statistics, it doesn’t stop acknowledging the original assumptions. Therefore, defensive statistics ensures not only high reliability but also high efficiency in the majority of cases.

Notes

The front picture was inspired by Figure 5 (Page 7) from Robust Statistics.

In the old times of applied statistics existence, all statistical experiments used to be performed by hand. In manual investigations, an investigator is responsible not only for interpreting the research results but also for the applicability validation of the used statistical approaches. Nowadays, more and more data processing is performed automatically on enormously huge data sets. Due to the extraordinary number of data samples, it is often almost impossible to verify each output individually using human eyes. Unfortunately, since we typically have no full control over the input data, we cannot guarantee certain assumptions that are required by classic statistical methods. These assumptions can be violated not only due to real-life phenomena we were not aware of during the experiment design stage, but also due to data corruption. In such corner cases, we may get misleading results, wrong automatic decisions, unacceptably high Type I/II error rates, or even a program crash because of a division by zero or another invalid operation. If we want to make an automatic analysis system reliable and trustworthy, the underlying mathematical procedures should correctly process malformed data.

The normality assumption is probably the most popular one. There are well-known methods of robust statistics that focus only on slight deviations from normality and the appearance of extreme outliers. However, it is only a violation of one specific consequence from the normality assumption: light-tailedness. In practice, this sub-assumption is often interpreted as “the probability of observing extremely large outliers is negligible.” Meanwhile, there are other implicit derived sub-assumptions: continuity (we do not expect tied values in the input samples), symmetry (we do not expect highly-skewed distributions), unimodality (we do not expect multiple modes), nondegeneracy (we do not expect all sample values to be equal), sample size sufficiency (we do not expect extremely small samples like single-element samples), and others.

Some statistical methods may handle violations of some of these assumptions. However, most popular approaches still have an applicability domain and a set of unsupported cases. Some limitations may be explicitly declared (e.g., “We assume that the underlying distribution is continuous and no tied values are possible”). Other constraints on the input data can be too implicit so that they have no mention in the relevant papers (e.g., there are no remarks like “We assume that the underlying distribution is non-degenerate and the dispersion is non-zero”). The boundary between supported and unsupported cases is not always clear: we can have a grey area in which the chosen statistical method is still applicable, but its statistical efficiency noticeably declines.

Methods of nonparametric and robust statistics mitigate some of these issues, but not all of them. Therefore, I develop the concept of defensive statistics in order to handle all possible violations of implicit and explicit assumptions. In classic statistics, there are a lot of powerful methods, but their hidden limitations don’t always get proper attention. When everything goes smoothly, it may be challenging to force yourself to focus on corner cases. However, if we want to achieve a decent level of reliability and avoid potential problems, preparation should be performed in advance. This may require a mindset shift: we should proactively search for all the implicit assumptions and plan our strategy for cases, in which one or several of these assumptions are violated.

See also

Making decisions under model misspecification · 2020 · Simone Cerreia-Vioglio et al.