Refined Mann–Whitney U Test

The (Wilcoxon–)Mann–Whitney $U$ test is one of the most popular non-parametric tests. It’s almost as efficient as the Student’s $t$-test under normality and more efficient under the majority of non-normal distributions (see Asymptotic Statistics, p198). This makes it a pragmatic test choice for comparing two independent samples.

In mann1947, H. B. Mann and D. R. Whitney suggested a naïve implementation of $p$-value calculation. This includes slow exact implementation and inaccurate normal approximation. In fix1955, E. Fix and J. L. Hodges described how to significantly improve the approximation precision using the Edgeworth expansion. In loeffler1982, A. Löffler proposed a fast and computationally efficient exact implementation. Still, most contemporary statistical packages use the classic slow and inaccurate methods from 1947. In this article, we review the fast and accurate way to calculate the Mann–Whitney $U$ test $p$-value and provide a reference implementation.

To compares two samples $\mathbf{x} = ( x_1, x_2, \ldots, x_n )$ and $\mathbf{y} = ( y_1, y_2, \ldots, y_m )$, we consider the $U$ statistic:

$$ U(\mathbf{x}, \mathbf{y}) = \sum_{i=1}^n \sum_{j=1}^m S(x_i, y_j),\quad S(a,b) = \begin{cases} 1, & \text{if } a > b, \\ 0.5, & \text{if } a = b, \\ 0, & \text{if } a < b. \end{cases} $$Essentially, it’s the number of pairs $(x_i, y_j)$ where $x_i > y_j$. The Mann–Whitney $p$-value is the probability of obtaining $U$ at least as extreme as the observed value, under the assumption that both $\mathbf{x}$ and $\mathbf{y}$ came from the same distribution.

Calculation of the exact $p$-value is a simple combinatorial problem. Unfortunately, the naïve approach from mann1947 is computationally inefficient, requires too much memory, and, therefore, is not suitable for large samples. The proposed alternative is the normal approximation, which gives reasonable results for the middle part of the distribution, but it’s highly inaccurate for the tails. Let’s consider the below R and Python snippets that calculate the exact and approximated $p$-values of the Mann–Whitney $U$ test for two slightly overlapped samples of size 15:

x <- c(13, 15, 16, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30)

y <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 14, 17, 18)

wilcox.test(x, y, alternative = "greater", exact = TRUE)$p.value

wilcox.test(x, y, alternative = "greater", exact = FALSE)$p.value

import numpy as np

from scipy.stats import mannwhitneyu

x = np.array([13, 15, 16, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30])

y = np.array([1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 14, 17, 18])

print(mannwhitneyu(x, y, alternative="greater", method="exact").pvalue)

print(mannwhitneyu(x, y, alternative="greater", method="asymptotic").pvalue)

In R 4.3.3 and SciPy 1.14.0, which use the normal approximation, we get the approximated value 23 times greater than the exact one:

0.000000290 # Exact Value

0.000006647 # Normal Approximation

For samples of size 50, the difference is up to $3.5\cdot 10^{11}$ times:

wilcox.test(51:100, 1:50, alternative = "greater", exact = TRUE)$p.value

wilcox.test(51:100, 1:50, alternative = "greater", exact = FALSE)$p.value

0.000000000000000000000000000009911653 # Exact Value

0.000000000000000003533036 # Normal Approximation

The approximation precision can be highly improved using the Edgeworth expansion from fix1955. For the first example, it gives the $p$-value of $0.000000294$, which is $1\%$ away from the true value. The applicability of the exact calculation can be extended using Löffler’s implementation from loeffler1982. Let’s review how to implement the Mann–Whitney $U$ test in a more precise and efficient way.

Löffler’s Exact

The original approach from mann1947 introduces $p_{n,m}(u)$ as the number of ways to get $U = u$ for samples of sizes $n$ and $m$ considering the relative order of the elements. It can be defined via the following equation:

$$ p_{n,m}(u) = p_{n-1,m}(u - m) + p_{n,m-1}(u). $$The straightforward implementation of this recurrence relation requires $\mathcal{O}(n^2m^2)$ time and memory. This makes it impractical for large samples.

In loeffler1982, Andreas Löffler derives an alternative recurrent equation:

$$ p_{n,m}(u) = \frac{1}{u} \sum_{i=0}^{u-1} p_{n,m}(i) \cdot \sigma_{n,m}(u - i), $$$$ \sigma_{n,m}(u) = \sum_{u \operatorname{mod} d} \varepsilon_d d,\quad\textrm{where}\; \varepsilon_d = \begin{cases} 1, & \textrm{where}\; 1 \leq d \leq n, \\ 0, & \textrm{else}, \\ -1, & \textrm{where}\; m+1 \leq d \leq m+n. \end{cases} $$This implementation allows us to calculate the exact $p$-value much faster using $\mathcal{O}(u)$ memory (worst case $\mathcal{O}(nm)$).

Edgeworth Expansion

The Edgeworth expansion extends the normal approximation approach, which is based on the normal distribution $\mathcal{N}(\mu_U, \sigma_U^2)$ defined by the following parameters:

$$ \mu_U = \frac{nm}{2},\quad \sigma_U = \sqrt{\frac{nm(n+m+1)}{12}}. $$The $z$-score is calculated with the continuity correction:

$$ z = \frac{U - \mu_U \pm 0.5}{\sigma_U}, $$The $p$-value is defined as follows (assuming the Edgeworth expansion to terms of order $1/m^2$):

$$ p_{E7}(z) = \Phi(z) + e^{(3)} \varphi^{(3)}(z) + e^{(5)} \varphi^{(5)}(z) + e^{(7)} \varphi^{(7)}(z), $$where

$$ e^{(3)} = \frac{1}{4!}\left( \frac{\mu_4}{\mu_2^2} - 3 \right),\quad e^{(5)} = \frac{1}{6!}\left( \frac{\mu_6}{\mu_2^3} - 15\frac{\mu_4}{\mu_2^2} + 30 \right),\quad e^{(7)} = \frac{35}{8!}\left( \frac{\mu_4}{\mu_2^2} - 3 \right)^2, $$$$ \mu_2 = \frac{nm(n+m+1)}{12}, $$$$ \mu_4 = \frac{mn(m+n+1)}{240} \bigl( 5(m^2 n + m n^2) - 2(m^2 + n^2) + 3mn - (2m + n) \bigr), $$$$ \begin{split} \mu_6 = \frac{mn(m+n+1)}{4032} \bigl( 35m^2 n^2 (m^2 + n^2) + 70 m^3 n^3 - 42 mn (m^3 + n^3) - 14 m^2 n^2 (m + n) +\\ + 16 (m^4 + n^4) - 52 mn (m^2 + n^2) - 43 m^2 n^2 + 32 (m^3 + n^3) +\\ + 14 mn (m + n) + 8 (m^2 + n^2) + 16 mn - 8 (m + n) \bigr), \end{split} $$$$ \varphi^{(k)}(z) = -\varphi(z) H_k(z), $$$$ H_3(z) = z^3 - 3z, $$$$ H_5(z) = z^5 - 10z^3 + 15z, $$$$ H_7(z) = z^7 - 21z^5 + 105z^3 - 105z. $$See also: fix1955, porter1974, ury1977, bean2004.

Approximation Error

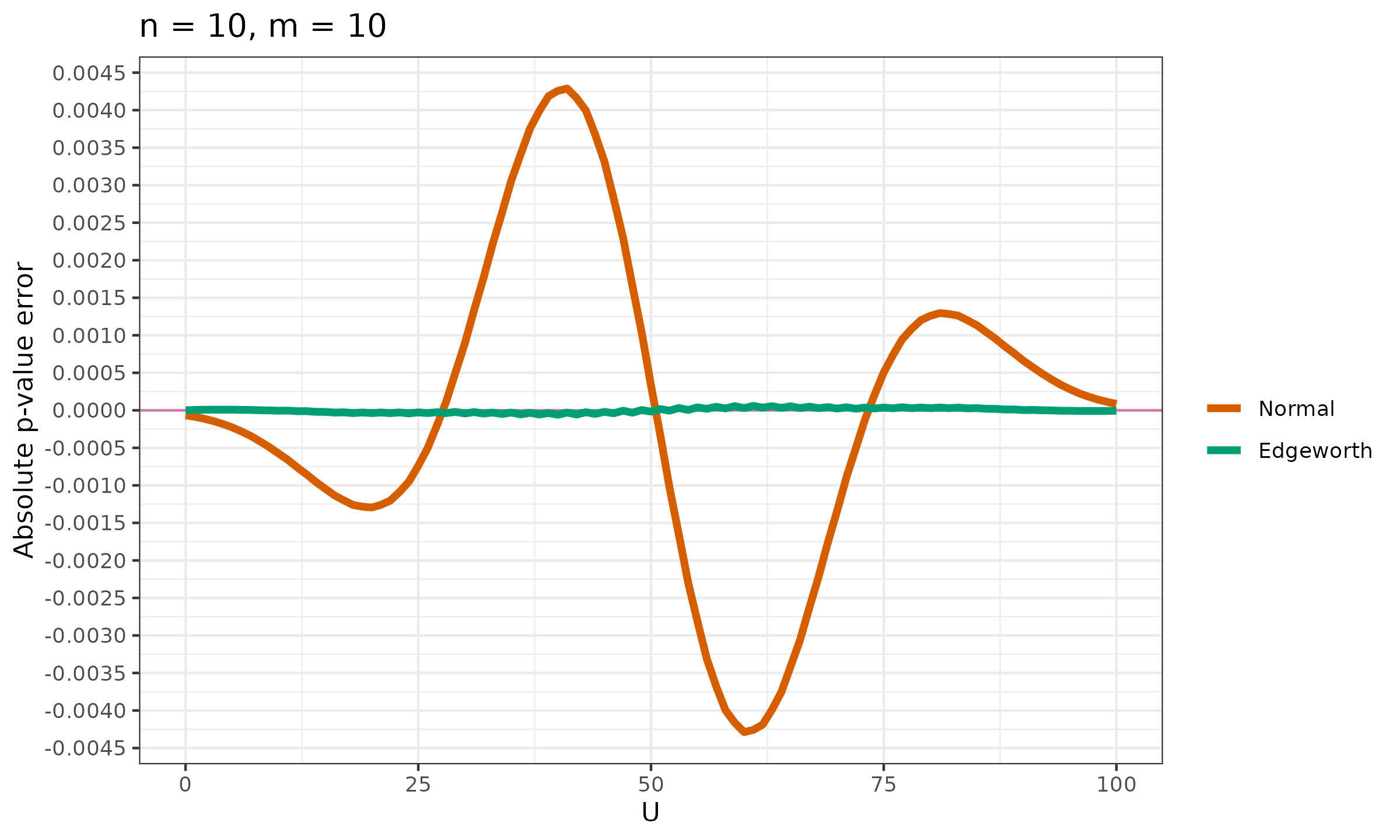

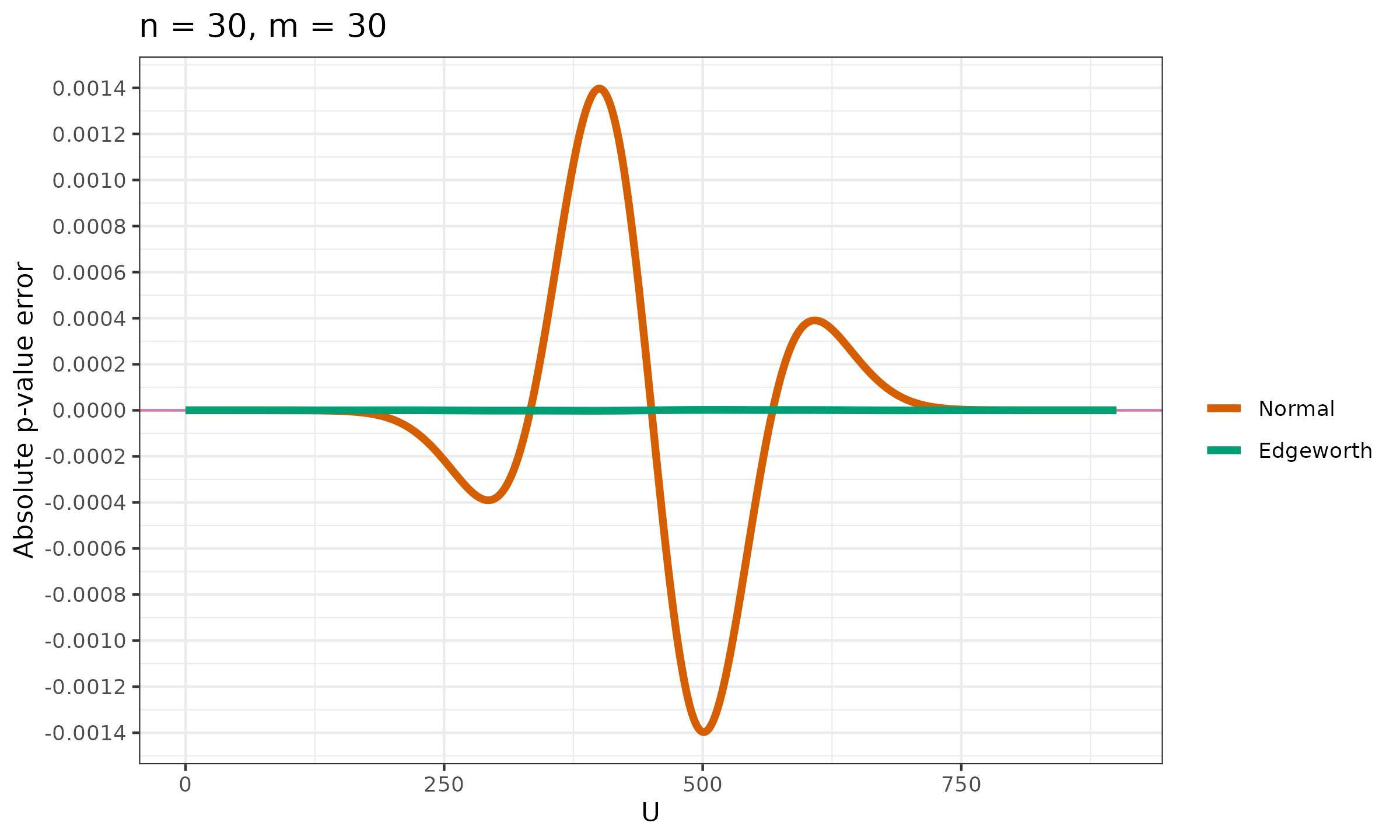

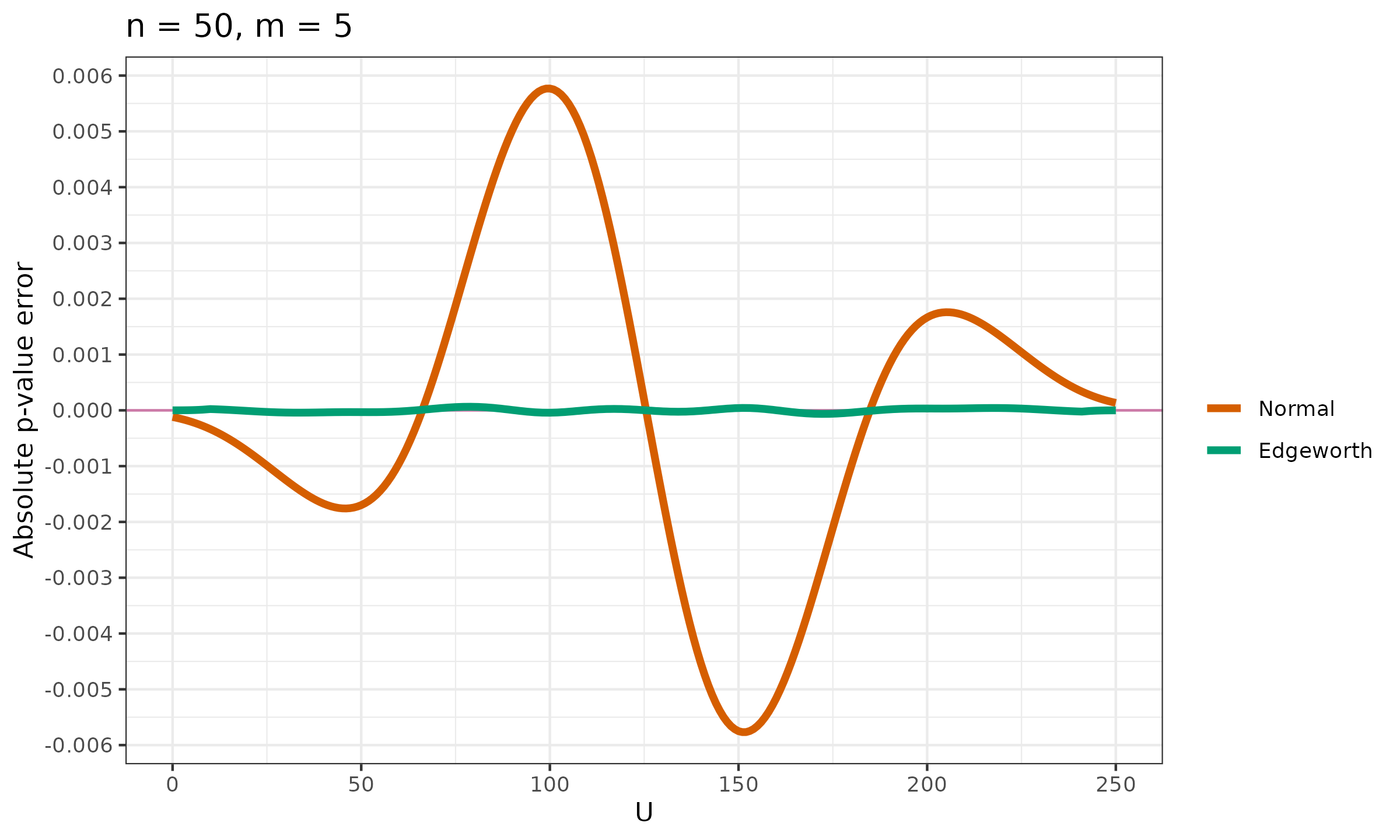

In the below figures, we compare the absolute $p$-value error of the normal approximation and the Edgeworth expansion against the exact value.

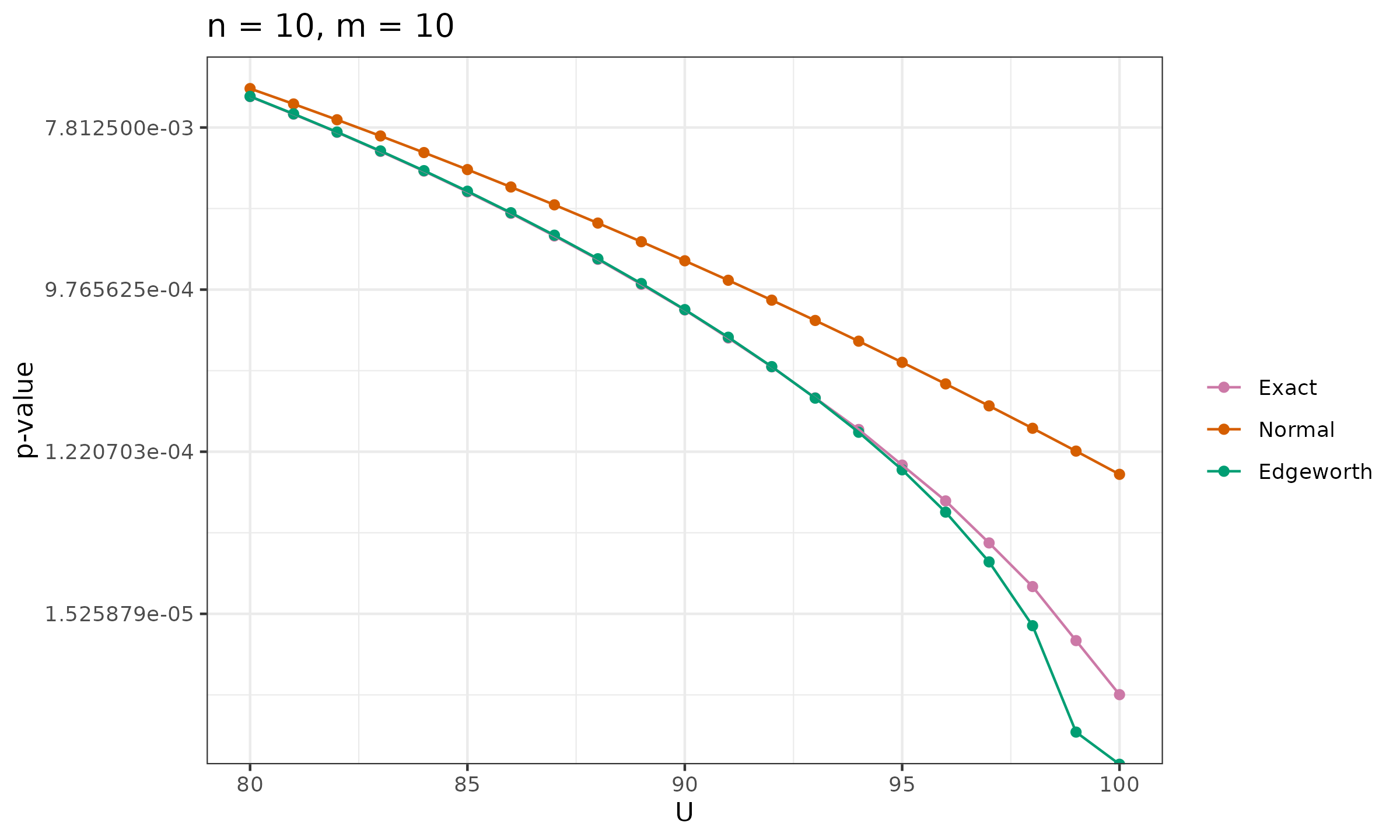

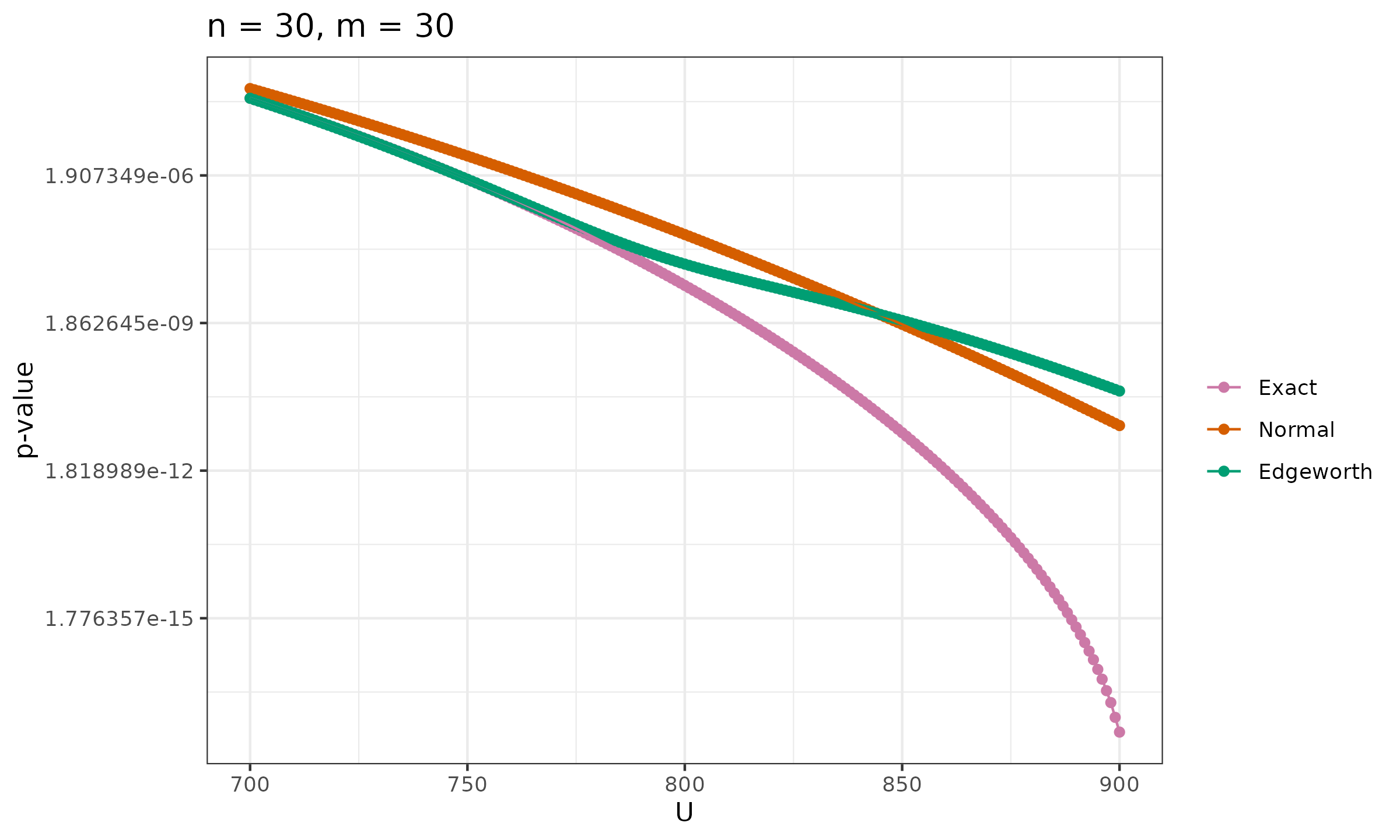

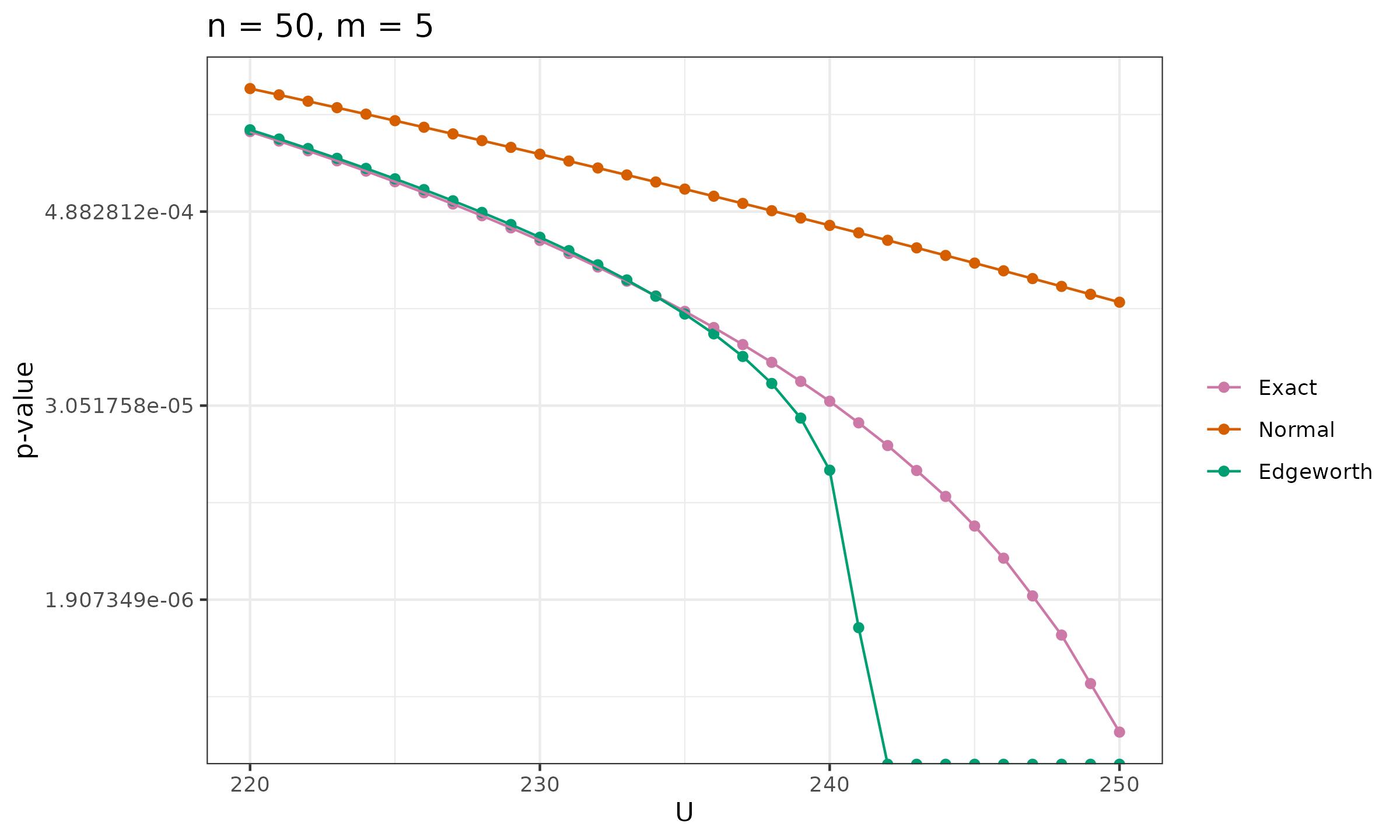

In the below figures, we show the estimated $p$-values in the tails on the logarithmic scale.

Implementation

Remarks on the below implementation:

- This is a reference implementation in C# presented to illustrate the algorithm using C-like syntax.

- For clarity and simplicity, the corner case handling is omitted. We implicitly assume that $n, m \geq 1$ and $0 \leq u \leq nm$.

- It is possible to introduce advanced performance optimization, but the presented implementation focuses on readability.

- Implementations of

LoefflerExactPValue,EdgeworthApproxPValue,ClassicExactPValue, andNormalApproxPValueare presented for the “greater” alternative hypothesis. - Presented

RunTest(x, y, threshold, alternative)is consistent withwilcox.test(x, y, alternative, mu)from R. - We neglect the tie correction.

- The discussed methods rely on the value of binomial coefficients $C_n^k$ and the CDF of the standard normal distribution $\Phi$. For completeness, we provide simple approximations of these functions.

using static Math;

public class MannWhitney

{

public static double LoefflerExactPValue(int n, int m, int u)

{

if (u <= 0) return 1.0;

u--;

int[] sigma = new int[u + 1];

for (int d = 1; d <= n; d++)

for (int i = d; i <= u; i += d)

sigma[i] += d;

for (int d = m + 1; d <= m + n; d++)

for (int i = d; i <= u; i += d)

sigma[i] -= d;

double[] p = new double[u + 1];

p[0] = 1;

for (int a = 1; a <= u; a++)

{

for (int i = 0; i < a; i++)

p[a] += p[i] * sigma[a - i];

p[a] /= a;

}

double cdf = p.Sum() / BinomialCoefficient(n + m, m);

double pValue = 1 - cdf;

return Min(Max(pValue, 0), 1);

}

public static double EdgeworthApproxPValue(int n, int m, int u)

{

double mu = n * m / 2.0;

double su = Sqrt(n * m * (n + m + 1) / 12.0);

double z = (u - mu - 0.5) / su;

double phi = StandardNormalPdf(z);

double Phi = StandardNormalCdf(z);

double mu2 = n * m * (n + m + 1) / 12.0;

double mu4 =

n * m * (n + m + 1) *

(0

+ 5 * m * n * (m + n)

- 2 * (Pow(m, 2) + Pow(n, 2))

+ 3 * m * n

- 2 * (n + m)

) / 240.0;

double mu6 =

n * m * (n + m + 1) *

(0

+ 35 * Pow(m, 2) * Pow(n, 2) * (Pow(m, 2) + Pow(n, 2))

+ 70 * Pow(m, 3) * Pow(n, 3)

- 42 * m * n * (Pow(m, 3) + Pow(n, 3))

- 14 * Pow(m, 2) * Pow(n, 2) * (n + m)

+ 16 * (Pow(n, 4) + Pow(m, 4))

- 52 * n * m * (Pow(n, 2) + Pow(m, 2))

- 43 * Pow(n, 2) * Pow(m, 2)

+ 32 * (Pow(m, 3) + Pow(n, 3))

+ 14 * m * n * (n + m)

+ 8 * (Pow(n, 2) + Pow(m, 2))

+ 16 * n * m

- 8 * (n + m)

) / 4032.0;

double e3 = (mu4 / Pow(mu2, 2) - 3) / 24;

double e5 = (mu6 / Pow(mu2, 3) - 15 * mu4 / Pow(mu2, 2) + 30) / 720;

double e7 = 35 * Pow(mu4 / Pow(mu2, 2) - 3, 2) / 40320;

double f3 = -phi * H3(z);

double f5 = -phi * H5(z);

double f7 = -phi * H7(z);

double cdf = Phi + e3 * f3 + e5 * f5 + e7 * f7;

double pValue = 1 - cdf;

return Min(Max(pValue, 0), 1);

double H3(double x) => Pow(x, 3) - 3 * x;

double H5(double x) => Pow(x, 5) - 10 * Pow(x, 3) + 15 * x;

double H7(double x) => Pow(x, 7) - 21 * Pow(x, 5) + 105 * Pow(x, 3) - 105 * x;

}

public static double ClassicExactPValue(int n, int m, int u)

{

u--;

int q = (int)Floor(u + 1e-9);

int nm = Max(n, m);

double[,,] w = new double[nm + 1, nm + 1, q + 1];

for (int i = 0; i <= nm; i++)

for (int j = 0; j <= nm; j++)

for (int k = 0; k <= q; k++)

{

if (i == 0 || j == 0 || k == 0)

w[i, j, k] = k == 0 ? 1 : 0;

else if (k > i * j)

w[i, j, k] = 0;

else if (i > j)

w[i, j, k] = w[j, i, k];

else if (j > 0 && k < j)

w[i, j, k] = w[i, k, k];

else

w[i, j, k] = w[i - 1, j, k - j] + w[i, j - 1, k];

}

double denominator = BinomialCoefficient(n + m, m);

double p = 0;

if (q <= n * m / 2)

{

for (int i = 0; i <= q; i++)

p += w[n, m, i];

}

else

{

q = n * m - q;

for (int i = 0; i < q; i++)

p += w[n, m, i];

p = denominator - p;

}

double cdf = p * 1.0 / denominator;

double pValue = 1 - cdf;

return Min(Max(pValue, 0), 1);

}

public static double NormalApproxPValue(int n, int m, int u)

{

double mu = n * m / 2.0;

double su = Sqrt(n * m * (n + m + 1) / 12.0);

double z = (u - mu - 0.5) / su;

double cdf = StandardNormalCdf(z);

double pValue = 1 - cdf;

return Min(Max(pValue, 0), 1);

}

// ------------------------------ The Test ------------------------------

public enum AlternativeHypothesis

{

TwoSides,

Less,

Greater

}

public enum Implementation

{

ClassicExact,

LoefflerExact,

NormalApprox,

EdgeworthApprox,

Auto

}

public static double RunTest(double[] x, double[] y, double threshold,

AlternativeHypothesis alternative, Implementation implementation = Implementation.Auto)

{

switch (alternative)

{

case AlternativeHypothesis.TwoSides:

{

double pValue1 = RunTestGreater(x, y.Select(v => v + threshold).ToArray(), implementation);

double pValue2 = RunTestGreater(y.Select(v => v + threshold).ToArray(), x, implementation);

double pValue = Min(Min(pValue1, pValue2) * 2, 1);

return pValue;

}

case AlternativeHypothesis.Less:

{

return RunTestGreater(y.Select(v => v + threshold).ToArray(), x, implementation);

}

case AlternativeHypothesis.Greater:

{

return RunTestGreater(x, y.Select(v => v + threshold).ToArray(), implementation);

}

default:

{

throw new ArgumentOutOfRangeException(nameof(alternative), alternative, null);

}

}

}

public static double RunTestGreater(double[] x, double[] y, Implementation implementation = Implementation.Auto)

{

int n = x.Length, m = y.Length;

double[] xy = new double[n + m];

for (int i = 0; i < n; i++)

xy[i] = x[i];

for (int i = 0; i < m; i++)

xy[n + i] = y[i];

int[] index = new int[n + m];

for (int i = 0; i < n + m; i++)

index[i] = i;

Array.Sort(index, (i, j) => xy[i].CompareTo(xy[j]));

double[] ranks = new double[n + m];

for (int i = 0; i < n + m;)

{

int j = i;

while (j + 1 < n + m && Abs(xy[index[j + 1]] - xy[index[i]]) < 1e-9)

j++;

double rank = (i + j + 2) / 2.0;

for (int k = i; k <= j; k++)

ranks[k] = rank;

i = j + 1;

}

double ux = 0;

for (int i = 0; i < n + m; i++)

if (index[i] < n)

ux += ranks[i];

ux -= n * (n + 1) / 2.0;

int u = (int)Round(ux);

if (implementation == Implementation.Auto)

implementation = n * m <= 10_000 ? Implementation.LoefflerExact : Implementation.EdgeworthApprox;

double pValue = implementation switch

{

Implementation.ClassicExact => ClassicExactPValue(n, m, u),

Implementation.LoefflerExact => LoefflerExactPValue(n, m, u),

Implementation.NormalApprox => NormalApproxPValue(n, m, u),

Implementation.EdgeworthApprox => EdgeworthApproxPValue(n, m, u),

_ => throw new ArgumentOutOfRangeException(nameof(implementation), implementation, null)

};

return pValue;

}

// ------------------------------ Helpers ------------------------------

private static double StandardNormalPdf(double x) => Exp(-x * x / 2) / Sqrt(2 * PI);

// ACM Algorithm 209: Gauss (Area under the Standard Normal Curve from -infinity to x)

// http://dl.acm.org/citation.cfm?id=367664

public static double StandardNormalCdf(double x)

{

double z;

if (Abs(x) < 1e-9)

z = 0.0;

else

{

double y = Abs(x) / 2;

if (y >= 3.0)

z = 1.0;

else if (y < 1.0)

{

double w = y * y;

z = ((((((((0.000124818987 * w

- 0.001075204047) * w + 0.005198775019) * w

- 0.019198292004) * w + 0.059054035642) * w

- 0.151968751364) * w + 0.319152932694) * w

- 0.531923007300) * w + 0.797884560593) * y * 2.0;

}

else

{

y -= 2.0;

z = (((((((((((((-0.000045255659 * y +

0.000152529290) * y - 0.000019538132) * y

- 0.000676904986) * y + 0.001390604284) * y

- 0.000794620820) * y - 0.002034254874) * y

+ 0.006549791214) * y - 0.010557625006) * y

+ 0.011630447319) * y - 0.009279453341) * y

+ 0.005353579108) * y - 0.002141268741) * y

+ 0.000535310849) * y + 0.999936657524;

}

}

return x > 0.0 ? (z + 1.0) / 2 : (1.0 - z) / 2;

}

public static double BinomialCoefficient(int n, int k)

{

if (n <= 65)

{

long[,] pascalTriangle = new long[n + 1, n + 1];

for (int i = 0; i <= n; i++)

{

pascalTriangle[i, 0] = 1;

for (int j = 1; j <= i; j++)

pascalTriangle[i, j] = pascalTriangle[i - 1, j - 1] + pascalTriangle[i - 1, j];

}

return pascalTriangle[n, k];

}

return Exp(FactorialLog(n) - FactorialLog(k) - FactorialLog(n - k));

}

public static double FactorialLog(double n) => GammaFunctionLogValue(n + 1);

public static double GammaFunctionLogValue(double x)

{

// For small x, the Stirling approximation has a noticeable error

// We resolve this problem using Gamma(x) = Gamma(x+1)/x

if (x < 1)

return StirlingApproximationLog(x + 3) - Log(x * (x + 1) * (x + 2));

if (x < 2)

return StirlingApproximationLog(x + 2) - Log(x * (x + 1));

if (x < 3)

return StirlingApproximationLog(x + 1) - Log(x);

return StirlingApproximationLog(x);

}

private static double StirlingApproximationLog(double x)

{

// Bernoulli numbers

const double b2 = 1.0 / 6, b4 = -1.0 / 30, b6 = 1.0 / 42, b8 = -1.0 / 30, b10 = 5.0 / 66;

// sum = sum(b[2*n] / (2n * (2n-1) * z^(2n-1)))

double series =

b2 / 2 / x +

b4 / 12 / (x * x * x) +

b6 / 30 / (x * x * x * x * x) +

b8 / 56 / (x * x * x * x * x * x * x) +

b10 / 90 / (x * x * x * x * x * x * x * x * x);

return x * Log(x) - x + Log(2 * PI / x) / 2 + series;

}

}